Now that generative AI tools like ChatGPT are available, classwork is bound to change as a result. How do we preserve academic integrity? What's cheating, and what's OK? Here are some ideas.

Artificial intelligence-powered tools are becoming more and more widespread.

As they have grown, educators have started to see a change coming. We wonder ...

- What traditional teaching practices will need a slight adjustment? Or an overhaul?

- How do we preserve student thinking and student skill development?

- What should we consider "cheating"? What's considered responsible use?

- How do we prepare students for their future -- one where AI's a part of it?

One of the hardest parts is envisioning the future of classwork. If artificial intelligence can write essays, what place do essays play in the future of learning? How will other examples of classwork change?

How should we adjust? Should we adjust?

There's one reality we need to grasp: AI isn't all good or all bad. AI tools are just that: tools. It's all in the way we use them.

So ... how do we use them? Can they be used to promote solid student learning?

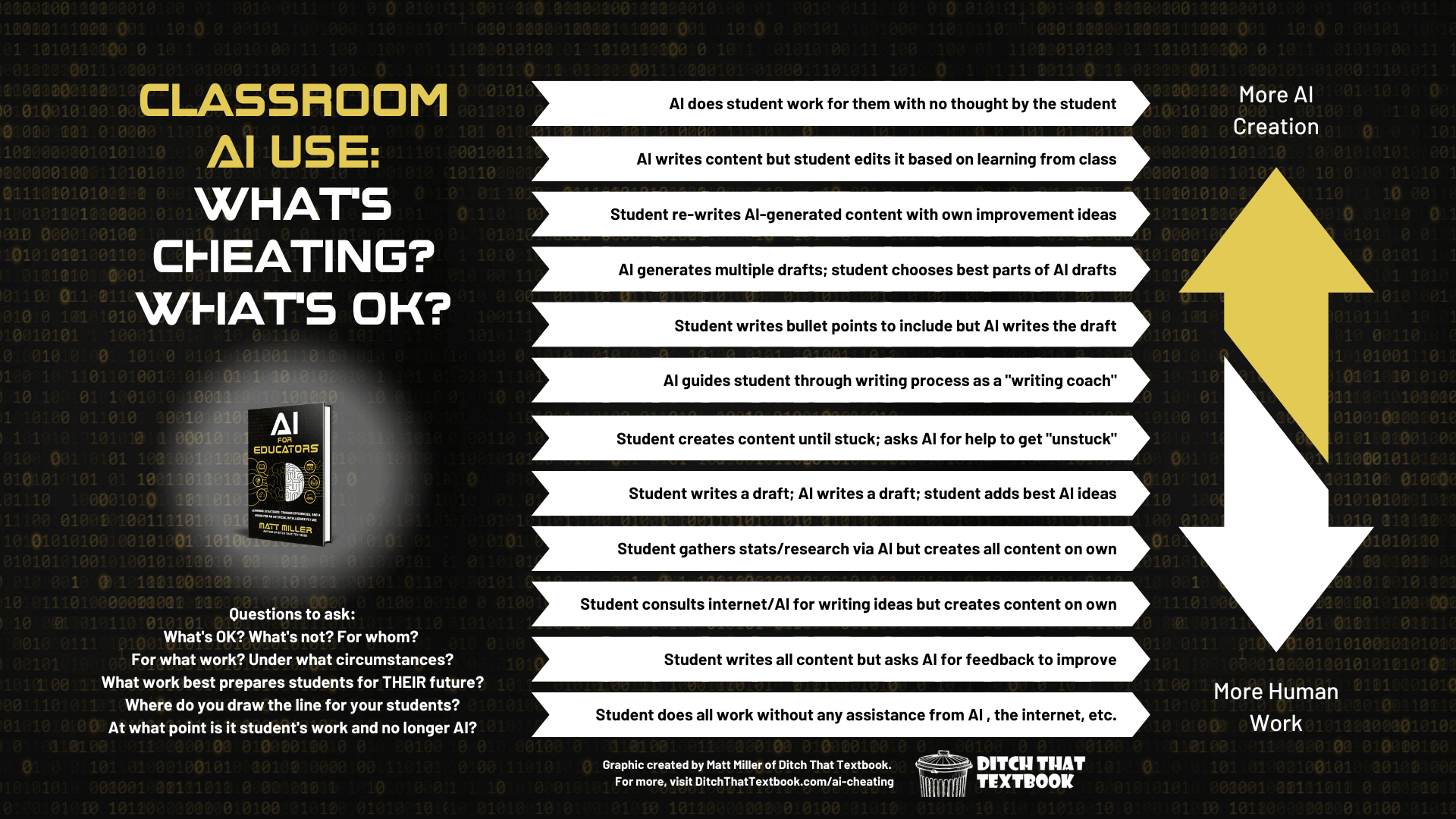

A classwork spectrum: From "all AI" to "all human"

Early in 2023, I created a slide for presentations I co-presented about ChatGPT/AI and their place in education. It was a spectrum, from "all AI" to "all human."

Every time I gave that presentation, people took pictures of that slide. They shared them on social media. They used the graphic as a discussion piece with fellow teachers and even with students.

You can find the original graphic here.

Based on that response, I've expanded that graphic (below) from six examples to 12 examples.

Full discussions about each of the 12 examples above are available below.

The examples in this graphic show that there are shades of artificial intelligence use in classwork. It's not a binary black-and-white case of "it's all bad" or "it's all good."

Granted, many of these examples are very writing-heavy and essay-focused. Here's why ...

- Large language models like ChatGPT are focused on creating text -- and that's the main complexity we seem to be trying to unravel right now.

- Essays -- and writing assignments -- are widespread across lots of types of classrooms now.

- It's a concrete example that many educators can understand and use for considering new ways of doing classwork.

Here's how you might use this graphic:

- Look at examples of classwork your students do now and consider if a variation of one of these would promote student learning -- and prepare your students for a future that AI is a part of.

- Identify where you would draw a figurative line through the graphic between what's acceptable and unacceptable in certain contexts or circumstances for your class (or for a type of work in your class).

- Use it as a discussion piece for conversations with students or teaching staff about acceptable or appropriate use of artificial intelligence in teaching and learning.

- Re-create the graphic with your own examples! Here's an editable copy of the template in Google Slides and in PowerPoint.

Let's discuss: 12 examples of AI-assisted classwork

What should we be aware of with these 12 examples? What are some pros and cons of each?

The sections below contain discussions about each of these AI-assisted examples of classwork.

Also, with each example, there's commentary about student transparency -- to what extent students might disclose their AI use and what the implications are.


Let’s just say it. This isn’t what we want. If a student uses AI like this to do classwork, there are deeper issues at play. Is the student able to do the work? Does the student feel confident enough to start? Does the student have motivation and reason to do the work?

Also, this isn’t the only way generative AI tools like ChatGPT are being used by students. And it isn’t the only vision for generative AI in learning … but students might not know what a responsible vision looks like unless we help them to see it.

Student transparency: There is none. Student is passing off AI work as their own.

There’s actually some student demonstration of skill and understanding here. Students are making surface-level changes to the AI writing and leaving big ideas in place. If the goal of your assignment is for students to write a coherent, well-organized essay from start to finish with clear communication, this assignment will miss several of those steps.

But if you want to talk about the process of writing, it could work. If you want to evaluate the writing of the AI tool, it could work. This is more about looking at the work writers, creators, and thinkers do than actually doing the work -- and that is beneficial in many situations.

Student transparency: Student discloses that most of the writing is done with AI. This probably only works if student provides the original AI creation and highlights the changes the student made to it -- and why.

This gives the student a heavier hand in the writing, sharing, and creating process than the entry above. Instead of making small adjustments, the student develops their own writing voice. They take more ownership of the final message -- instead of just accepting what the AI tool creates. Again, this is a gradual release of responsibility and creation work. This third option in the list (and the two options above) could be empowering steps for emerging writers -- those who feel paralyzed trying to create from scratch in front of a blank screen with a blinking cursor. It could also be empowering for students learning English as a new language.

Student transparency: Student discloses that most of the writing is done with AI. This probably only works if student provides the original AI creation and highlights the changes the student made to it -- and why.

In previous examples, students count on AI to decide on the final ideas and writing in the assignment. Here, students are picking and choosing the best parts of several AI-generated drafts. This assignment increases the complexity of the decision-making process.

Students make choices about the best content to include. But they also have to keep in mind the writing format they’re using and stick to its structure. (Example: It probably wouldn’t make sense to include three fully developed conclusion paragraphs. A better choice might be to stick to one -- or to write one that logically takes all the points from the three conclusions into account.)

Student transparency: Student discloses that most of the writing is done with AI, but organization and choices are done by them. Providing the AI-generated drafts with the final product would help preserve academic integrity.

Want to prepare students for the real world and a real future that includes AI? I think we have to seriously consider versions of this example. Why? Because this is an option that lots of adults really, really, really like.

(It’s fair to say “but the adults have developed their thinking and writing skills and can skip to this step -- while students still need to develop those skills.” There’s something to that. But we also shouldn’t force students only to do work that’s inauthentic to what is expected -- and commonly used -- in the real world, either. We need balance.)

How do we further boost student skills through an assignment like this? Encourage them to create better and better lists of bullet points -- and to test them to see if those improved bullet points get better drafts written by AI. Also, encourage students to improve on the draft created by AI, adjusting it for clarity, tone, personal writer’s voice, appropriateness for audience, etc.

Student transparency: Student discloses that the draft was created by AI with the guidance of ideas/points provided by the student. Student provides bullet point list of ideas, AI draft, and even summary of changes made to the AI draft.

This example is a big shift. It’s the one where the pendulum swings from “mostly written by AI” to “mostly written by human.” For that reason, lots of teachers will want to rush from the previous steps to this point. But if we rush and neglect the important scaffolding of skills, students will feel stuck and unable to do even this work -- and they’ll have to make hard decisions about how to do work they’re not equipped to do.

This step is based on having a tool that can serve as a writing coach. More and more large language AI models with natural language processing will arrive in the K-12 education space. And some of those models will be trained in best practices for writing and communicating clear thought. (For example, Khan Academy’s Khanmigo already has a version of this.) Until then, other large language model AI assistants (like ChatGPT, Bard, Bing, etc.) can do this to some extent if you prompt them carefully.

In this example, instead of doing the thinking and writing work for them, AI guides students through the writing process. It prompts them to brainstorm ideas, to prioritize them, to list reasons why they’re important, to create an introduction to provide context, etc.

Student transparency: This will depend on teacher preference and how heavily the AI coaches the student. Student could disclose how much the AI coached them and even provide a transcript of the interactions with the AI model. Or the student could claim that they wrote it themselves. (In the real world, at some point, even responsible adults don’t have to cite all of the supports they used. A professional wouldn’t submit a resume with a disclaimer: “Created with the aid of a career coach.”)

This example is maybe the most vague and nebulous one in the list. What do you consider “stuck”? We all know there are varying levels of stuck. In my own writing work, there are times when my mind is tired and I need a break … and there are times when I really don’t know what to write next. Both of them make me feel “stuck,” but a level of persistence (or a quick break) will get me through mental fatigue.

If students understand the importance of grit, tenacity, and persistence -- and you feel that your students will follow through accordingly -- maybe this is a good option for them. It’s definitely a good example of how artificial intelligence can support and assist human intellect in a symbiotic way.

Student transparency: This will depend on teacher preference and how heavily the AI coaches the student. Student could disclose how much the AI coached them and even provide a transcript of the interactions with the AI model. Or the student could claim that they wrote it themselves. (See “in the real world” point above.)

All of the responsibility of creation has been laid on the shoulders of the student at this point. In this example, artificial intelligence serves as a point of comparison afterward -- and one that can help refine and edit the student work.

When I was writing my book AI for Educators, my friend and fellow author Holly Clark was writing her book, The AI Infused Classroom. We did a version of this activity: we wouldn’t read each other’s work until our own work was complete. That way, we wouldn’t let it influence our own work -- consciously or subconsciously. However, the classroom example of this lets the student improve their work with the AI draft -- a skill that is relevant and responsible in today’s world and in the future.

Student transparency: This might depend on how much the student’s work is influenced by the AI draft. If the student makes minor changes, they might not need to cite the influence AI had on their work. With major changes, asking the student to reflect on the changes and why they made them could be beneficial for everyone. If the AI draft is part of the assignment, the student could turn it in with the student-created work.

In a way, this is the real world work of a researcher. Researchers gather information. They analyze it. They figure out what’s important. Then, they find a coherent, clear way to communicate their findings to benefit the reader. If AI helps you write lesson plans as a teacher, you’re doing a form of this. You’re responsible for the final product, but AI can assist you in getting there.

Of course, the big caveat here is accuracy. AI is notorious for inaccurate information, statistics, and claims. Fact-checking is crucial. Honestly, it has always been crucial. Consider the mantra of the journalism profession: “Even if your mother says it, check it out.” Maybe AI’s inaccuracies will help us focus on verifying information and on information literacy more than ever.

Also, you might not consider AI a primary source in the first place. We don’t let students count Wikipedia and Google as sources of information. They’re ways to find sources. They’re summaries of what the source said. An AI tool like ChatGPT isn’t the source itself. It can be helpful for understanding and improving our work, but it isn’t an actual source. (That’s my take, at least.)

Student transparency: Student could provide a transcript of stats/research collected with AI; however, it may be more precise for the student to create a bibliography with the sources that the AI model draws from instead.

This is like going to Pinterest for home decorating ideas. It’s like looking up recipes online. It’s like mimicking your favorite YouTuber in a video -- or your favorite singer or artist or author. Referencing the internet for ideas is something we do every day. Ideas are everywhere. It’s up to humans to capitalize on them. Of course, there are real world lessons to be learned about “citing your sources” and when to do it. But then again, there are also real world implications to understanding when it isn’t necessary. Blues artists don’t preface every song by saying “I love the blues because of B.B. King, so he gets the credit.”

An important question in this process: is there a benefit to limiting the student to only seeking inspiration from AI? The answer here might be “yes,” and you might have your reasons. Just be sure that the reasons benefit the student -- and prepare them for a world where AI is present and used in lots of walks of life.

Student transparency: Student might not need to cite AI if it was only for ideas and inspiration. Teacher might ask student to supply a transcript of AI-generated ideas for discussion of the writing/creation process.

This is really the bare minimum in my opinion. Spell check, autocorrect, and Grammarly (among others) all use artificial intelligence to improve our writing. They’re a first line of defense that we ask students to employ every time they turn something in to us. Even if students aren’t using a large language AI model like ChatGPT (or another AI writing tool), they should at the very least use spell check and a grammar checker.

Lots of writers use AI tools to improve individual passages, paragraphs, and even entire written works. An AI model can take best practices in writing and make suggestions to align writing with them. The big question students and teachers should ask, though: Are the AI suggestions in line with what students have been taught in class? Because there are lots of ways to write -- and different ways to interpret the world -- the one an AI model suggested might not line up with what students have been taught by the teacher.

Student transparency: We don’t ask students to disclose that they’ve used spell check, grammar check, or autocorrect. Unless you want to have a conversation about the process of creating, student likely doesn’t need to do anything.

Sure, this is the one entry in the list where all teachers would agree: this isn’t cheating. But let’s be honest. When we ask students to do work without any assistance from AI or the internet, a few issues arise:

- We probably aren’t preparing them in a relevant way to live in the real world of their future.

- Some are probably going to use the internet or AI even if we tell them they can’t.

- We may need to check our own motivations for asking them to do this kind of work. They may be rooted in our own misperceptions of what will truly help students to thrive in this world.

If our reasoning for this kind of work is “I want to know what’s in their head” or “I want to know what they can do on their own,” we’re kind of disconnected from the real world. To the first point, it’s extremely rare that the real world demands that we do something without the support of the internet and/or artificial intelligence. To the second point, doing work completely in a silo -- disconnected from other people or sources of support or information -- is also extremely rare.

If there’s resistance to this kind of work, we need to examine our own motivations instead of the motivations of those that are resisting.

Student transparency: Not really applicable. There’s no way a student can transparently prove that they weren’t supported by AI or the internet.
